HMP: Hand Motion Priors for Pose and Shape Estimation from Video

Winter Conference on Applications of Computer Vision (WACV), 2024

Enes Duran (1,2), Muhammed Kocabas (1,3), Vasileios Choutas (1,3), Zicong Fan (1,3), Michael J. Black (1)

(1) Max Planck Institute for Intelligent Systems, Tübingen

(2) Eberhard Karls University of Tübingen, Germany

(3) ETH Zürich, Switzerland

Abstract

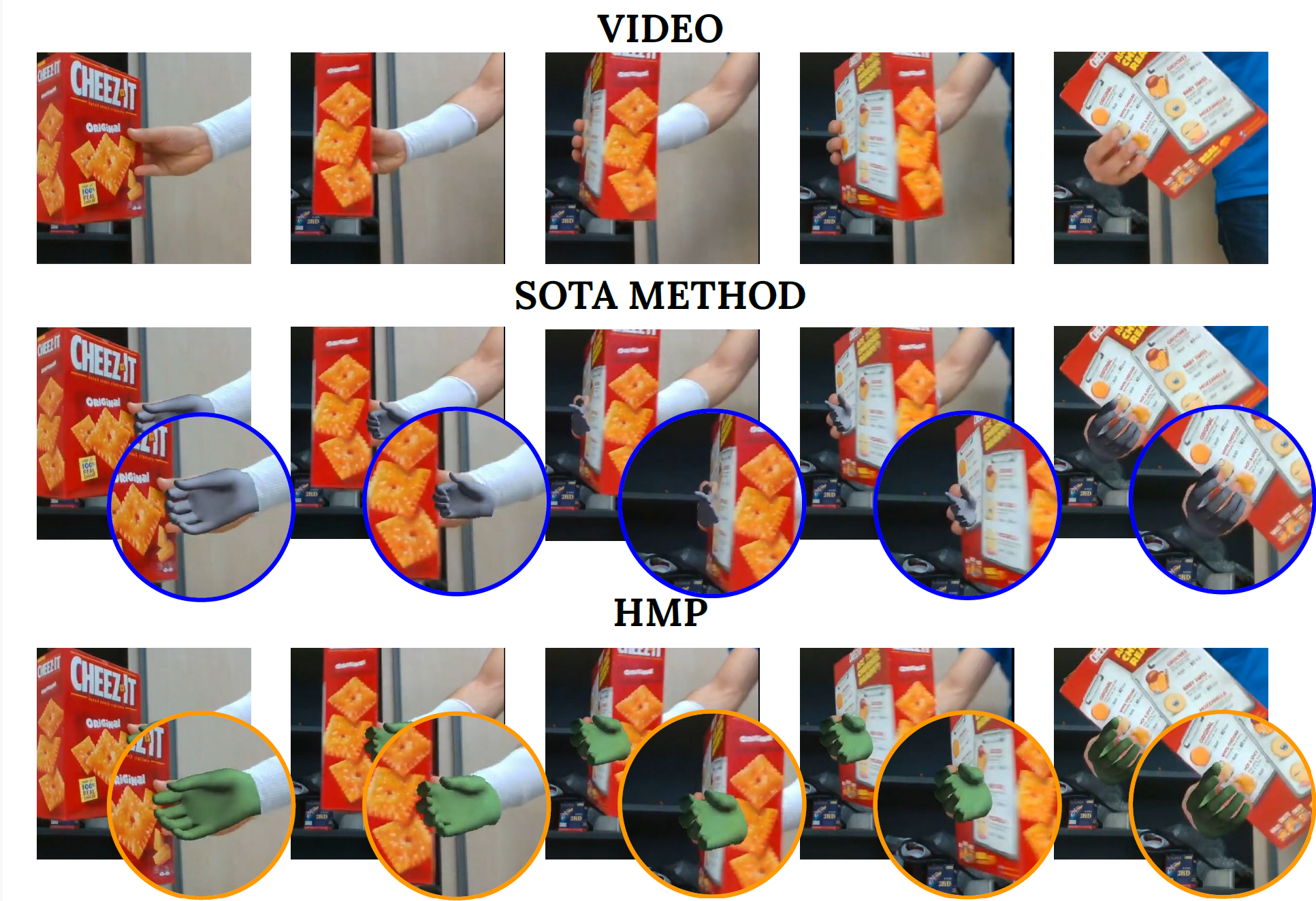

Understanding how humans interact with the world requires accurate 3D hand pose estimation, a task complicated by the hand’s high degree of articulation, frequent occlusions, self-occlusions, and rapid motions. While most existing methods rely on single-image inputs, videos provide useful cues for addressing these issues. However, existing video-based 3D hand datasets are insufficient for training feedforward models that generalize to in-the-wild scenarios. On the other hand, large human motion capture datasets that also include hand motions, e.g., AMASS, are available. We therefore develop a generative motion prior specific to hands, trained on the AMASS dataset, which features diverse and high-quality hand motions. This motion prior is then used for video-based 3D hand motion estimation through latent optimization. Integrating this robust motion prior significantly improves performance, especially in occluded scenarios, and produces stable, temporally consistent results that surpass conventional single-frame methods. We demonstrate our method’s efficacy through qualitative and quantitative evaluations on the HO3D and DexYCB datasets, with special emphasis on an occlusion-focused subset of HO3D.
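The abstract describes estimating hand motion by optimizing in the latent space of a generative motion prior. The toy below is a minimal sketch of that general idea under heavy simplifying assumptions, not HMP's implementation: a hypothetical linear decoder (`decode`, with made-up `W`, `b`) stands in for the learned prior, the observations are the pose parameters themselves rather than image evidence, occlusion is a 0/1 mask over frames, and the prior is a standard Gaussian on the latent code weighted by an illustrative `lam`. All names and values are invented for illustration; the point is only to show why fitting one latent code lets occluded frames be filled in from the prior.

```python
import random

# HYPOTHETICAL toy setup, not the HMP architecture: a motion sequence of T frames
# with D pose parameters per frame is flattened into a vector of length T * D and
# produced by a linear stand-in decoder x = W z + b from a low-dimensional latent z.
T, D, LATENT = 8, 3, 4
N = T * D


def decode(W, b, z):
    """Map a latent code z to a flattened motion sequence x = W z + b."""
    return [sum(W[i][k] * z[k] for k in range(len(z))) + b[i] for i in range(len(b))]


def objective(W, b, z, y, mask, lam):
    """Data term on visible entries (mask 1) plus a Gaussian prior lam * ||z||^2."""
    x = decode(W, b, z)
    data = sum(m * (xi - yi) ** 2 for xi, yi, m in zip(x, y, mask))
    return data + lam * sum(zk * zk for zk in z)


def fit_latent(W, b, y, mask, lam=1e-3, steps=3000):
    """Gradient descent on z; occluded entries (mask 0) add no data term."""
    n, k = len(W), len(W[0])
    # The Hessian is 2 (W_v^T W_v + lam I). Its largest eigenvalue is at most
    # 2 (||W_v||_F^2 + lam), so this step size makes every update non-increasing.
    lr = 1.0 / (2.0 * (sum(mask[i] * W[i][j] ** 2 for i in range(n) for j in range(k)) + lam))
    z = [0.0] * k  # start at the prior mean
    for _ in range(steps):
        x = decode(W, b, z)
        r = [mask[i] * (x[i] - y[i]) for i in range(n)]  # residual, zeroed where occluded
        grad = [2.0 * sum(W[i][j] * r[i] for i in range(n)) + 2.0 * lam * z[j]
                for j in range(k)]
        z = [z[j] - lr * grad[j] for j in range(k)]
    return z


# Synthetic data: the "true" motion is decoded from a random latent code.
rng = random.Random(0)
W = [[rng.gauss(0.0, 0.3) for _ in range(LATENT)] for _ in range(N)]
b = [rng.gauss(0.0, 0.1) for _ in range(N)]
z_true = [rng.gauss(0.0, 1.0) for _ in range(LATENT)]
x_true = decode(W, b, z_true)

# Simulate occlusion: frames 3-5 are unobserved (mask 0, observation replaced by 0).
mask = [0 if 3 <= i // D <= 5 else 1 for i in range(N)]
y = [xt if m else 0.0 for xt, m in zip(x_true, mask)]

z_hat = fit_latent(W, b, y, mask)
x_hat = decode(W, b, z_hat)
occluded_err = max(abs(x_hat[i] - x_true[i]) for i in range(N) if mask[i] == 0)
print(f"max error on occluded frames: {occluded_err:.4f}")
```

In this toy, every frame is decoded from one shared latent code, so the visible frames pin down `z` and the decoder fills in frames 3-5. A real prior would replace the linear map with a learned network and the masked data term with image-based evidence such as 2D keypoint reprojection; those choices are assumptions here, not details taken from the paper.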

Citation

@InProceedings{Duran_2024_WACV,
    author    = {Duran, Enes and Kocabas, Muhammed and Choutas, Vasileios and Fan, Zicong and Black, Michael J.},
    title     = {HMP: Hand Motion Priors for Pose and Shape Estimation From Video},
    booktitle = {Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision (WACV)},
    month     = {January},
    year      = {2024},
    pages     = {6353-6363}
}